15 Core Ideas Shaping the Future of AI—and Why They Matter Today

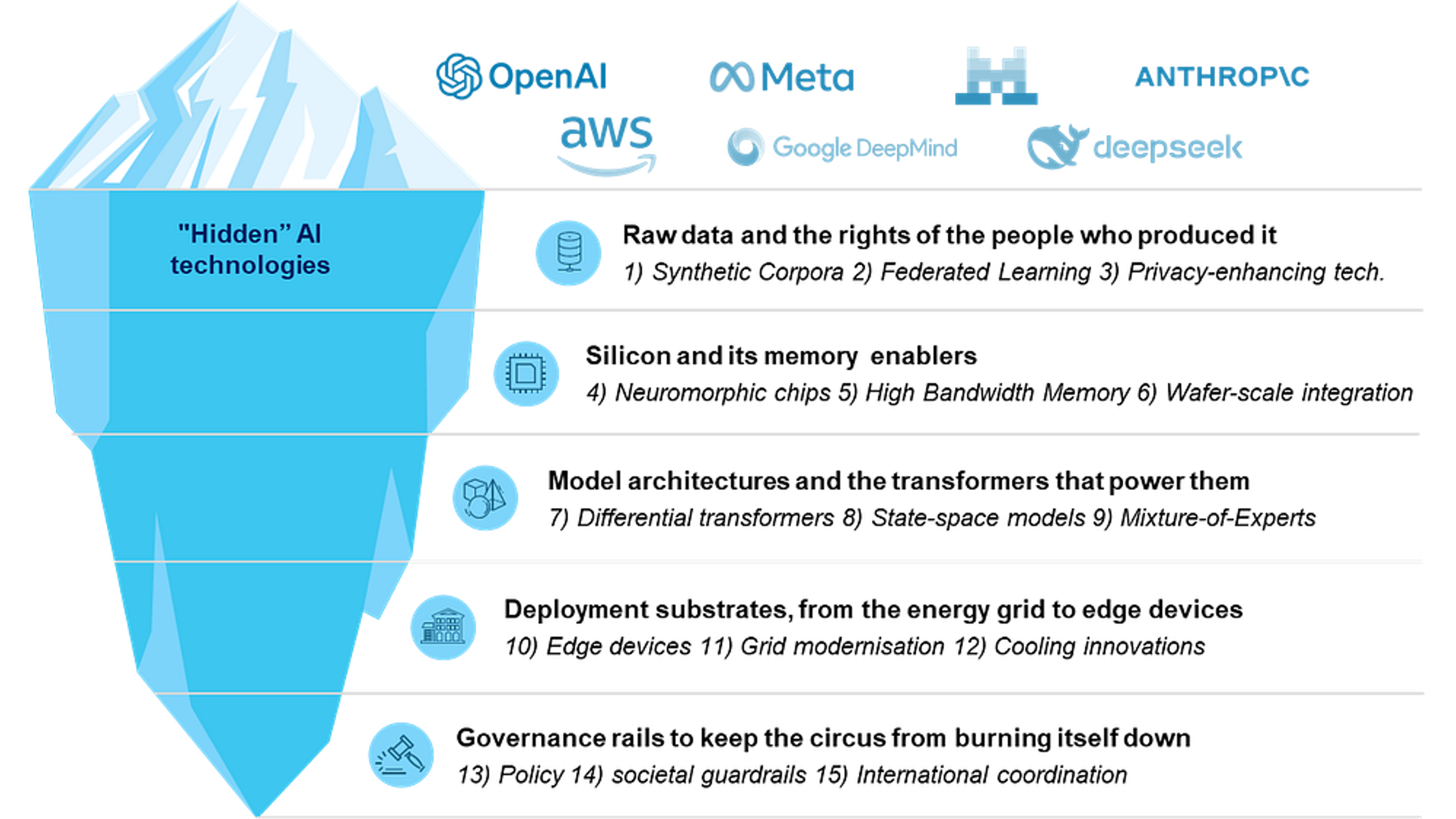

The landscape of Artificial Intelligence (AI) is evolving rapidly, driven by interconnected technological advances and a growing awareness of foundational ethical considerations. At revWhiteShadow, we examine the concepts that are shaping AI’s trajectory and redefining its potential. We believe that understanding these core ideas is crucial for navigating AI’s present and preparing for its future. While the discourse often focuses on the end goal of Artificial General Intelligence (AGI), AGI is not an isolated breakthrough but the culmination of a relay race of interlocking technologies. The fuel that powers these advances, raw data, and the rights of the individuals who generate it are proving to be the linchpins of both success and ethical deployment. The internet as we know it may soon prove insufficient for the demands of true AGI, and regulators worldwide are already reassessing data privacy and ownership.

The Interlocking Relay Race: Building Blocks of Artificial General Intelligence

The pursuit of Artificial General Intelligence (AGI)—AI capable of understanding, learning, and applying knowledge across a wide range of tasks at a human or even superhuman level—is a monumental undertaking. It is essential to disabuse ourselves of the notion that AGI will be a singular, monolithic invention. Instead, we see AGI as the emergent property of a complex, collaborative ecosystem of specialized AI technologies, each building upon and enhancing the capabilities of the others. This is precisely why we conceptualize it as an interlocking relay race. Each technological component represents a crucial baton, passed from one specialized domain to another, progressively building towards a more comprehensive and generalized intelligence.

Advancements in Deep Learning Architectures: The Foundation Layers

At the base of this relay are the advanced deep learning architectures. Innovations in Convolutional Neural Networks (CNNs) for image recognition, Recurrent Neural Networks (RNNs) and their descendants like Long Short-Term Memory (LSTM) networks for sequential data processing, and more recently, the revolutionary Transformer architecture, have laid the groundwork. Transformers, with their attention mechanisms, have unlocked unprecedented capabilities in natural language processing (NLP) and are now proving their versatility across diverse modalities. The continuous refinement of these architectures, focusing on efficiency, scalability, and the ability to learn from less data, is a critical step in the relay.
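
To make the attention mechanism mentioned above a little more concrete, here is a minimal sketch of scaled dot-product attention in plain Python. The function names and the toy token vectors are illustrative assumptions, not taken from any particular library, and production Transformers add learned projections, multiple heads, and batching on top of this core step.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors (lists of floats)."""
    d_k = len(keys[0])
    dim = len(values[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)])
    return outputs

# Toy input: three "tokens" with 2-dimensional embeddings (made-up numbers).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mixed = attention(tokens, tokens, tokens)  # self-attention: Q, K, V all come from the tokens
```

Each row of `mixed` is a blend of all three token vectors, weighted by how similar each token is to the one being processed. That blending is what lets a Transformer relate every position in a sequence to every other position in a single step.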

The Rise of Generative Models: Creating and Innovating

Following these foundational layers are sophisticated generative models. Techniques like Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) have moved beyond mere pattern recognition to actively create novel content, from realistic images and text to complex molecular structures. This ability to generate and synthesize is a significant leap, moving AI closer to creative problem-solving. The ongoing development in diffusion models represents another powerful iteration, enabling high-fidelity content generation with greater control and diversity.

Reinforcement Learning and Complex Decision-Making: Learning Through Interaction

Crucial to AGI’s adaptability is advanced reinforcement learning (RL). RL algorithms, which enable agents to learn optimal behaviors through trial and error in dynamic environments, are becoming increasingly sophisticated. The ability to learn from interaction, to adapt strategies based on rewards and penalties, is paramount for AI systems that need to navigate unpredictable real-world scenarios. Breakthroughs in areas like multi-agent reinforcement learning and offline RL are extending its applicability and robustness.
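
As a rough illustration of learning through trial and error, the sketch below runs tabular Q-learning on a hypothetical five-cell corridor in which the agent is rewarded only for reaching the rightmost cell. The environment, the hyperparameters, and every name here are assumptions chosen for brevity, not a reference implementation.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)    # index 0 moves left, index 1 moves right

def step(state, action):
    """Apply a move; the reward is 1.0 only when the goal cell is reached."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # q[state][action_index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)            # explore, or break a tie at random
            else:
                a = 0 if q[state][0] > q[state][1] else 1   # exploit the best known action
            nxt, reward, done = step(state, ACTIONS[a])
            # Q-learning update: move the estimate toward reward + discounted best future value.
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
    return q

q_table = train()
policy = ["left" if left > right else "right" for left, right in q_table[:-1]]
```

After training, `policy` holds the greedy action for each non-goal cell. The agent is never told that moving right is correct; it infers that from the rewards it collects.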

Transfer Learning and Meta-Learning: The Pace Setters

To accelerate the learning process and reduce data dependency, transfer learning and meta-learning are indispensable. Transfer learning allows models trained on one task to adapt to a related but different task with minimal retraining, effectively reusing learned knowledge. Meta-learning, or “learning to learn,” aims to equip AI systems with the ability to quickly adapt to new tasks and environments with few examples. These techniques act as significant pace-setters in the relay, drastically reducing the time and resources needed to develop new AI capabilities.
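
One common form of transfer learning keeps a previously trained feature extractor frozen and trains only a small task-specific "head" on the new task. The sketch below imitates that pattern in plain Python. `pretrained_features` is a hypothetical stand-in for a model trained elsewhere, and the target function and hyperparameters are made up for illustration.

```python
def pretrained_features(x):
    """Hypothetical frozen feature extractor, standing in for a model trained on another task."""
    return [x, x * x]

def predict(head, x):
    feats = pretrained_features(x)
    return head["bias"] + sum(w * f for w, f in zip(head["weights"], feats))

def mse(head, data):
    return sum((predict(head, x) - y) ** 2 for x, y in data) / len(data)

def fit_head(data, lr=0.1, steps=2000):
    """Train only the small head with gradient descent; the features are never updated."""
    head = {"weights": [0.0, 0.0], "bias": 0.0}
    n = len(data)
    for _ in range(steps):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for x, y in data:
            err = predict(head, x) - y
            for j, f in enumerate(pretrained_features(x)):
                grad_w[j] += 2 * err * f / n
            grad_b += 2 * err / n
        head["weights"] = [w - lr * g for w, g in zip(head["weights"], grad_w)]
        head["bias"] -= lr * grad_b
    return head

# New task with only eleven labelled examples (made-up target: 3x^2 - 2x + 1).
new_task = [(i / 5.0, 3 * (i / 5.0) ** 2 - 2 * (i / 5.0) + 1) for i in range(-5, 6)]
untrained = {"weights": [0.0, 0.0], "bias": 0.0}
head = fit_head(new_task)
```

Because the reused features already capture the structure the new task needs, a three-parameter head fitted on a handful of examples is enough. That is the resource saving the paragraph above describes.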

Explainable AI (XAI) and Trustworthiness: The Ethical Baton

As AI systems become more complex and their decisions more impactful, the need for explainable AI (XAI) and inherent trustworthiness becomes paramount. This is not just a technical challenge but an ethical imperative. The ability to understand why an AI made a particular decision is crucial for debugging, accountability, and building user trust. The development of techniques that provide transparent insights into AI’s reasoning processes is a critical baton that must be carried responsibly throughout the development of AGI.
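
One simple, model-agnostic way to probe why a model behaves as it does is permutation importance: shuffle one input feature and measure how much the error grows. The sketch below assumes a hypothetical stand-in model and synthetic data, and it is only one of many explanation techniques, not a complete XAI toolkit.

```python
import random

def model(row):
    """Stand-in for a trained model: relies on feature 0 and ignores feature 1."""
    return 3.0 * row[0] + 0.0 * row[1]

def mean_squared_error(rows, targets):
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)

def permutation_importance(rows, targets, feature, rng):
    """Increase in error after shuffling one feature column across rows."""
    baseline = mean_squared_error(rows, targets)
    column = [r[feature] for r in rows]
    rng.shuffle(column)
    shuffled = [r[:feature] + [v] + r[feature + 1:] for r, v in zip(rows, column)]
    return mean_squared_error(shuffled, targets) - baseline

rng = random.Random(0)
rows = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(200)]
targets = [3.0 * r[0] for r in rows]   # synthetic labels that depend only on feature 0
importances = [permutation_importance(rows, targets, f, rng) for f in range(2)]
```

A large value in `importances` means the model's predictions depend on that feature, while a value near zero means the feature is being ignored. That kind of evidence supports debugging and accountability.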

The Indispensable Role of Raw Data and Individual Rights

The engine that drives all these technological advancements is raw data. Without vast quantities of diverse and high-quality data, even the most sophisticated AI algorithms would falter. However, the origin and stewardship of this data are becoming increasingly critical considerations, intrinsically linked to the rights of the people who produced it.

Data as the New Oil: Fueling AI’s Engine

Data is not an inert resource. It is the lifeblood of modern AI, particularly for the data-hungry deep learning models that underpin current breakthroughs. The volume, variety, and velocity of data generated today are what allow AI to learn complex patterns, make accurate predictions, and perform tasks that were once the exclusive domain of human intelligence. Customer transactions, social media interactions, sensor readings, and scientific experiments all supply the raw material from which intelligent systems are forged.

Quality Over Quantity: The Nuance of Data Curation

While quantity is important, the quality of data is increasingly recognized as the true differentiator. Biased, incomplete, or inaccurate data will inevitably lead to biased, incomplete, and inaccurate AI systems. This necessitates rigorous data curation, cleaning, and labeling processes. The meticulous effort invested in ensuring data integrity and representativeness directly impacts the reliability and fairness of the resulting AI models. This is where the human element, the understanding of context and nuance, becomes irreplaceable in the AI development lifecycle.
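
A first pass at data curation can be as simple as counting the problems named above before any training starts. The following sketch assumes records are plain dictionaries with a `label` field; the field names and sample rows are hypothetical.

```python
from collections import Counter

def audit(records, label_key="label"):
    """Report rows with missing values, exact duplicates, and the label balance."""
    rows_with_missing = sum(
        1 for r in records if any(v is None or v == "" for v in r.values())
    )
    seen, duplicates = set(), 0
    for r in records:
        key = tuple(sorted(r.items()))   # order-independent fingerprint of the row
        if key in seen:
            duplicates += 1
        seen.add(key)
    label_counts = Counter(r.get(label_key) for r in records)
    return {
        "rows": len(records),
        "rows_with_missing": rows_with_missing,
        "duplicates": duplicates,
        "label_counts": dict(label_counts),
    }

# Made-up sample: one duplicate, one empty text, one missing label.
records = [
    {"text": "great product", "label": "positive"},
    {"text": "great product", "label": "positive"},
    {"text": "", "label": "negative"},
    {"text": "arrived late", "label": None},
    {"text": "works fine", "label": "positive"},
]
report = audit(records)
```

A report like this does not fix anything by itself. It does show a human reviewer where labels are skewed or rows are unusable, which is where judgment about context and nuance comes in.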

The Provenance and Ownership of Data: A Developing Frontier

A profound shift is occurring in our understanding of data provenance and ownership. For too long, data has been treated as a commodity to be collected and utilized with minimal consideration for its originators. However, as the value and impact of data become more apparent, questions of who truly owns the data generated by individuals, and what rights they have over its use, are moving to the forefront. This involves recognizing that the data individuals generate through their online activities, purchases, and interactions is an extension of their digital identity and labor.

Ethical Data Sourcing and Consent: The Foundation of Trust

The ethical sourcing of data, underpinned by informed consent, is becoming non-negotiable. As AI systems become more pervasive, it is imperative that individuals are aware of how their data is being collected, used, and shared, and that they have the agency to control this process. This requires transparent data policies, clear opt-in mechanisms, and robust frameworks for managing consent. Building trust in AI fundamentally depends on respecting the privacy and autonomy of individuals who contribute to the data ecosystem.
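
An opt-in consent mechanism can be sketched as a small record per person that starts with nothing granted and is checked before any data use. The class, purpose names, and user IDs below are hypothetical, and a real system would also need audit trails, timestamps, and legal review.

```python
from dataclasses import dataclass, field

@dataclass
class ConsentRecord:
    """Per-user consent, opt-in by default: no purpose is allowed until granted."""
    user_id: str
    granted_purposes: set = field(default_factory=set)

    def grant(self, purpose):
        self.granted_purposes.add(purpose)

    def revoke(self, purpose):
        self.granted_purposes.discard(purpose)

    def allows(self, purpose):
        return purpose in self.granted_purposes

def usable_for(purpose, consents):
    """Return the IDs of users whose data may be used for the given purpose."""
    return [c.user_id for c in consents if c.allows(purpose)]

alice = ConsentRecord("alice")
alice.grant("model_training")

bob = ConsentRecord("bob")
bob.grant("model_training")
bob.revoke("model_training")       # a user can change their mind at any time

carol = ConsentRecord("carol", {"analytics"})

training_users = usable_for("model_training", [alice, bob, carol])
```

Scoping consent to a named purpose, rather than storing a single yes/no flag, keeps a user's agreement to analytics from silently becoming agreement to model training.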

The Value of Data Contribution: Rewarding the Source

A more equitable future for AI will likely involve mechanisms that recognize and potentially reward data contributors. If individuals are the source of the value that powers AI, there is a growing ethical argument for their participation in the benefits derived from that data. This could manifest in various forms, from direct compensation to greater control over data usage and access to AI-powered services. This shift from passive data generation to active data stewardship is a critical element in the sustainable and ethical development of AI.

The Expanding Universe of AI: Beyond the Internet’s Horizon

The current infrastructure of the internet, a marvel of interconnectedness, may soon face limitations as the demands of advanced AI, particularly AGI, continue to escalate. The sheer scale of computation, data transfer, and real-time processing required for truly general intelligence could strain, if not exceed, the current internet paradigm.

The Limitations of Current Internet Infrastructure for AGI

The internet, designed for human-to-human communication and information retrieval, might not be optimally structured for the massive, continuous, and ultra-low-latency data flows that an AGI system would necessitate. Imagine the constant, high-bandwidth communication required between myriad specialized AI modules, each operating on vast datasets and requiring near-instantaneous feedback. This could lead to bottlenecks and performance degradation that hinder the emergence and operation of AGI.

Specialized Networks and Edge Computing: Building New Pathways

To address these potential limitations, we are witnessing the development of specialized networks and the rise of edge computing. These are not merely incremental improvements but fundamental shifts in how data is processed and AI is deployed. Edge computing, which brings computation and data storage closer to the source of data generation, can reduce latency and bandwidth requirements, enabling faster, more responsive AI applications. This distributed processing model could be essential for managing the data demands of a future AGI.
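
The bandwidth argument for edge computing can be shown with a toy example: a device summarizes each window of sensor readings locally and uploads only the summary. The sensor values, window size, and function names below are made up for illustration.

```python
def summarize_window(readings):
    """Reduce a window of raw readings to four numbers computed on the device."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": sum(readings) / len(readings),
    }

def edge_batches(stream, window):
    """Split the stream into fixed-size windows and summarize each one locally."""
    return [summarize_window(stream[i:i + window]) for i in range(0, len(stream), window)]

# Simulated temperature stream of 1,000 readings (synthetic values).
stream = [20.0 + (i % 10) * 0.1 for i in range(1000)]
summaries = edge_batches(stream, window=100)

values_sent_raw = len(stream)            # every reading uploaded to a central server
values_sent_edge = 4 * len(summaries)    # only four numbers per window uploaded
```

In this toy setup the device uploads 40 values instead of 1,000. The trade-off is that the central system no longer sees individual readings, so the summary has to be chosen with the downstream task in mind.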

Quantum Computing and AI: A New Paradigm of Processing Power

Quantum computing is a potentially paradigm-shifting development for AI. For certain problems, quantum algorithms are known to outperform the best known classical methods: Shor’s algorithm offers a superpolynomial speedup for integer factoring, and Grover’s algorithm offers a quadratic speedup for unstructured search. Whether comparable gains extend to complex optimization and machine learning model training remains an open research question, but if they do, problems that are intractable today could come within reach. In that case, the synergy between quantum computing and AI could accelerate the development of AGI and lead to solutions for problems we can barely conceive of today.

The Data Deluge and the Need for Intelligent Data Management

The data deluge we are experiencing requires more than storage; it demands intelligent management. As AI systems become more sophisticated, they will need not only to access but also to efficiently process, filter, and synthesize enormous volumes of data in real time. This calls for advanced data warehousing, efficient indexing, and AI-driven data management tools that can keep pace with the systems they serve.

The Evolving Role of Regulation and Data Privacy

As AI’s capabilities grow and its societal impact becomes more profound, regulators are increasingly focusing on data privacy and AI governance. This growing attention signifies a critical juncture, where the ethical and legal frameworks surrounding AI development and deployment are being actively shaped.

The Global Push for Data Privacy Regulations

Inspired by frameworks like the General Data Protection Regulation (GDPR) in the European Union, nations worldwide are implementing and strengthening data privacy laws. These regulations aim to give individuals more control over their personal data, ensure transparency in its collection and use, and impose stricter accountability on organizations that handle it. This trend is a direct response to the growing awareness of the potential risks associated with widespread data collection and AI processing.

AI Governance and Ethical Frameworks: Ensuring Responsible Development

Beyond privacy, there is a growing demand for comprehensive AI governance and ethical frameworks: guidelines and standards for the development, deployment, and oversight of AI systems that keep them fair, transparent, accountable, and beneficial to society. Such frameworks also need to address algorithmic bias, job displacement, and the potential misuse of AI technologies.

The Challenge of Balancing Innovation with Protection

The challenge for regulators and policymakers is to strike a delicate balance between fostering innovation and protecting individuals and society. Overly restrictive regulations could stifle technological progress, while insufficient oversight could lead to unintended consequences and societal harm. Finding this equilibrium is crucial for ensuring that AI’s future is one of progress and shared prosperity.

The Future of Data Ownership and AI’s Ethical Compass

The ongoing discussions around data ownership will inevitably intertwine with the future of AI. How we resolve these fundamental questions about who controls and benefits from data will significantly influence the ethical compass of AI development. This includes exploring models of data trusts, cooperatives, and federated learning that empower individuals and communities.
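
Federated learning, mentioned above, lets participants train a shared model without handing over their raw data. The sketch below shows the core idea of federated averaging on a one-parameter model `y = w * x`. The client datasets, learning rate, and function names are assumptions for illustration, and real deployments add secure aggregation, client sampling, and much larger models.

```python
def local_update(w, data, lr=0.05, steps=20):
    """One client's gradient descent on its own private data for the model y = w * x."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def federated_average(global_w, client_datasets, rounds=10):
    """Each round, clients train locally and the server averages their weights."""
    for _ in range(rounds):
        # Only the updated weight and the sample count leave each client.
        updates = [(local_update(global_w, data), len(data)) for data in client_datasets]
        total = sum(n for _, n in updates)
        global_w = sum(w * n for w, n in updates) / total   # weighted by dataset size
    return global_w

# Three clients with private (x, y) pairs, all generated from y = 2x (synthetic).
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0)],
    [(0.5, 1.0), (1.5, 3.0), (2.5, 5.0)],
]
w = federated_average(0.0, clients)
```

The server only ever sees model weights, never the `(x, y)` pairs, yet the shared model still recovers the relationship present in every client's data. That separation is why the technique is often discussed alongside data trusts and cooperatives.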

The Internet’s Future: A Foundation for AGI or a Bottleneck?

The question of whether the current internet infrastructure is sufficient for the demands of AGI is a pressing one. The specialized networks and edge computing solutions mentioned earlier are not just optimizations but potential re-architectures of our digital landscape, designed to accommodate the unprecedented computational and data requirements of advanced AI. The evolution of the internet, therefore, is intrinsically linked to the realization of AGI’s potential, and it is a future we are actively building at revWhiteShadow. We believe that by deeply understanding these fifteen core ideas—the relay race of technologies, the critical importance of data and individual rights, and the evolving landscape of infrastructure and regulation—we can better navigate the transformative era of artificial intelligence and ensure its development aligns with human values and aspirations.